The Insolvency Service’s September 2018 report pulled no punches in expressing dissatisfaction with some monitoring outcomes. The tone seemed to be: we want fewer promises to do better and more disciplinary penalties. Has this message already changed the face of monitoring?

The Insolvency Service’s September 2018 Report can be found at www.gov.uk/government/publications/review-of-the-monitoring-and-regulation-of-insolvency-practitioners and its Annual Review of IP Regulation is at www.gov.uk/government/publications/insolvency-practitioner-regulation-process-review-2018.

In this article, I examine the following:

- On average, a quarter of all IPs were visited last year

- But is there a 3-yearly monitoring cycle any longer?

- 2018 saw the fewest targeted visits on record

- …but more targeted visits are expected in 2019

- No RPB ordered any plans for improvement

- Instead, monitoring penalties and referrals for disciplinary/investigation action doubled

- Is this a sign that the Insolvency Service’s big stick is hitting its target?

- IPs had a 1 in 10 chance of receiving a monitoring or complaints sanction last year

How frequently are IPs being visited?

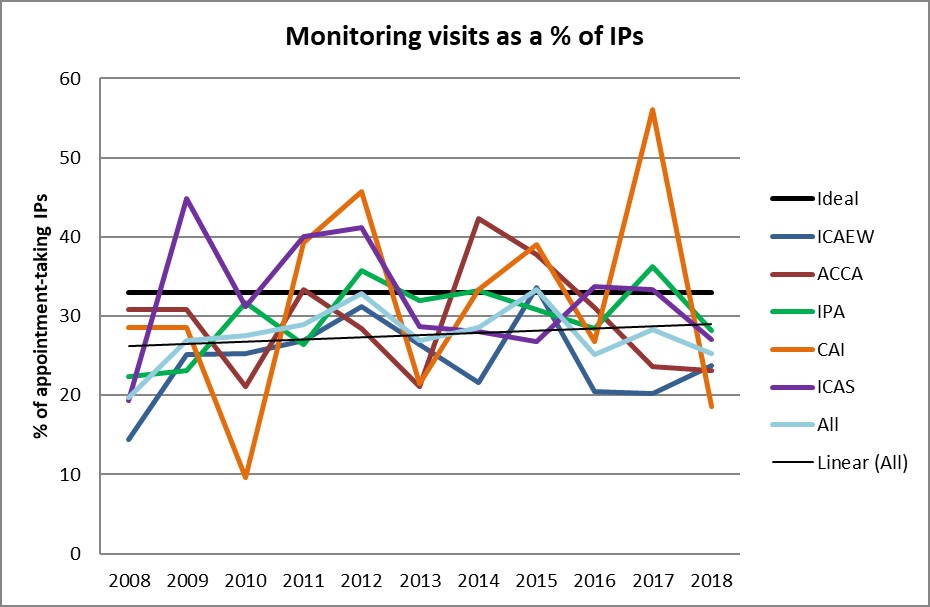

With the exception of Chartered Accountants Ireland (which is not surprising given its bumper year in 2017), all RPBs visited around a quarter of their IPs last year. It’s good to see the RPBs operating this consistently, but how does this square with the supposed standard 3-yearly routine?

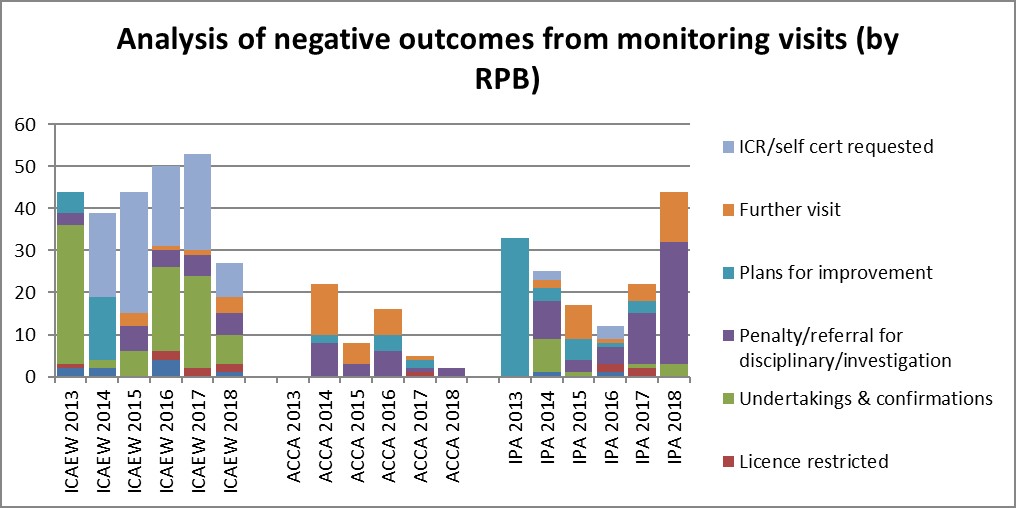

Firstly, I find it odd that coverage of ACCA-licensed IPs seems to have dropped significantly. After receiving a fair amount of criticism from the InsS over its monitoring practices, the ACCA handed the regulation of its licensed IPs over to the IPA in October 2016. Yet the proportion of ACCA IPs visited since then has dropped from c.100% to 79%.

Another factor that I had overlooked in previous analyses is the effect of monitoring volume IVA providers (“VIPs”). Since at least 2014, the Insolvency Service’s principles for monitoring VIPs have required at least annual visits to VIPs. Drawing on TDX’s figures for the 2018 market shares in IVAs, the IPA licensed all of the IPs in the firms that fall within the InsS’ definition of a VIP. On the assumption that each of these IPs received an annual visit, excluding these visits would bring the IPA’s coverage over the past 3 years to 56% of the rest of its IPs. Of course, there are many reasons why this figure could be misleading, including that I do not know how many VIP IPs any of the RPBs had licensed in 2016 or 2017.

The ICAEW’s 64% may also reflect its different approach to visits to IPs in the largest firms: the ICAEW visits the firm annually (to cover the work of some of its IPs), but, because of the large number of IPs in the firm, the gap between visits to each IP within the firm is up to 6 years. I cannot attempt to adjust the ICAEW’s figure to exclude these less frequently visited IPs, but suffice it to say that, if they were excluded, I suspect we would see something closer to a standard c.3-yearly visit for all ICAEW-licensed IPs outside the large firms.

These departures from the 3-year monitoring cycle standard, which cannot be quantified (by me, at least) with any accuracy, mean that very little can be gleaned from this graph. Unfortunately, the average is no longer much of an indication to IPs of when they might expect their next monitoring visit.

The IPA’s new approach to monitoring

In addition to its up-to-4-visits-per-year shift for VIPs, at its annual conference earlier this year, the IPA announced that it would also be departing from the 3-yearly norm for other IPs.

The IPA has published few details about its new approach. All that I have seen is that the frequency of monitoring visits is set on a risk-assessment basis (which, I have to say, it was in my days there, albeit that the InsS used to insist on a maximum 3-year gap) and that it is a “1-6 year monitoring cycle – tailored visits to types of firm” (the IPA’s 2018/19 annual report).

In light of this vagueness, I asked a member of the IPA secretariat for more details: was the plan only to extend the period for those in the largest firms, as the ICAEW has done, or at least only for those practices with robust in-house compliance teams with a proven track record? The answer was no, it could apply to smaller firms. He gave the example of a small-firm IP who only does CVLs: if the IPA were happy that the IP could do CVLs well and her bond schedules showed that she wasn’t diversifying into other case types, she would likely be put on an extended monitoring cycle. He saw remote monitoring as the key for the future, saying that much can be gleaned from a review of documents filed at Companies House. He explained, however, that IPs would not be told what cycle length they had been allocated.

While I do not wish to throw cold water on this development, as I have long supported risk-based monitoring, it does seem a peculiar move, especially at a time when questions are being asked about the current regulatory regime: if a present concern is that the regulators are not adequately discouraging bad behaviour and are not expediting the removal of the “bad apples”, then it is curious that the monitoring grip is being loosened now.

Also, now that I visit clients on an annual basis, I realise just how much damage can be done in a short period of time. It only takes a few misunderstandings of the legislation, a rogue staff member or a hard-to-manage peak in activity (or an unplanned trough in staff resources) to result in some real howlers. How much damage could be done in 6 years, especially if an IP were less than honest? Desktop monitoring can achieve only so much.

What this means for my analysis of the annual reports, however, is that the 3-year benchmark for monitoring visits – or one third of IPs being monitored per year – is no longer relevant ☹ But it will still be interesting to see how the averages vary in the coming years.

Targeted visits drop to an all-time low

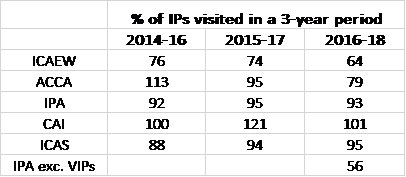

Only 10 targeted visits were carried out last year – the lowest number since the InsS started reporting them – and it seems that all RPBs are avoiding them in equal measure.

But 2019 may show a different picture, as several targeted visits have been ordered as a result of 2018 monitoring visits…

Are the Insolvency Service’s criticisms bearing fruit?

I was particularly alarmed by the overall tone of the Insolvency Service’s “review of the monitoring and regulation of insolvency practitioners” published in September 2018. In several places in the report, the InsS expressed dissatisfaction with some of the outcomes of monitoring visits.

I got the feeling that the Service disliked the focus on continuous improvement that, I think, has been a strength of the monitoring regime. Instead, the Service expected to see more investigations and disciplinary actions arising from monitoring visit findings. The report singled out apparently poor advice to debtors and apparently unfair or unreasonable fees or disbursements as requiring a disciplinary file to be opened with the aim of remedies being ordered. The focus of the InsS criticisms does seem to be squarely on activity in the VIPs, but the report left me worried that the criticisms could change the face of monitoring for everyone.

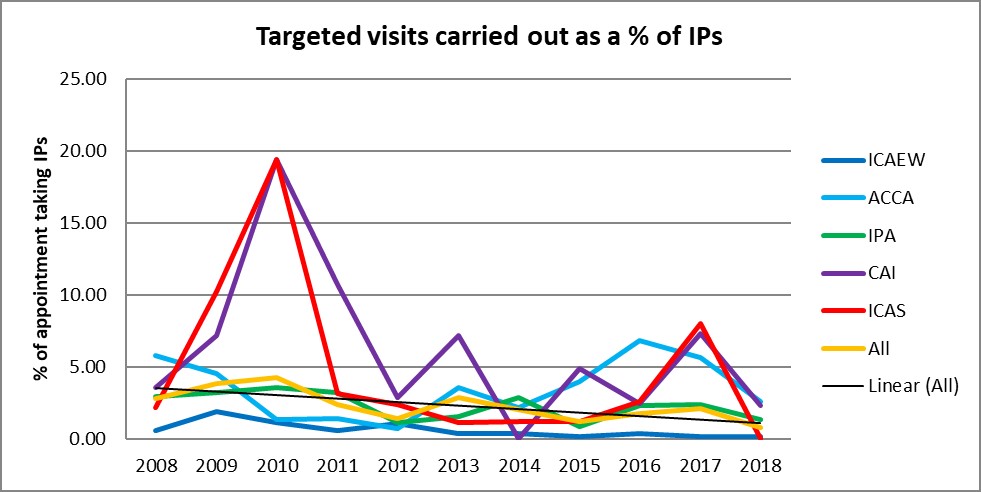

2018 is the first year (in the period analysed) in which no monitoring visit resulted in a plan for improvement. On the other hand, the number of penalties/referrals for disciplinary/investigation action doubled.

Could the InsS’ report be responsible for this shift? OK, the report was published quite late in 2018, in September, but I am certain that the RPBs had a rough idea of what it would contain long before then. Or perhaps the Single Regulator debate has tempted some within the RPBs and their committees to be seen to be taking a tougher line? Or you might think that these kinds of actions are long overdue?

I think that the RPBs have tried hard over the last decade or so to overcome the negativity of the JIMU-style approach to monitoring. In more recent years, monitoring has become constructive and there has been some commendably open and honest communication between RPB and IP. This has helped to raise standards by focusing on how firms can improve for the future, rather than spending everyone’s time and effort analysing and accounting for the past. It concerns me that the InsS seems to want to remove this collaborative approach and make monitoring more like a complaints process. In my view, such a shift may result in many IPs automatically taking a more defensive stance in monitoring visits and challenging many more findings. That would not improve standards and would take up much more of everyone’s time.

Getting back to the graph, a referral for an investigation might not result in a sanction at all, so this does not necessarily mean that the IPA has issued more sanctions as a consequence of monitoring visits. Also, the IPA’s apparent enthusiasm for this tool may simply reflect its (past) committee structure, whereby the committee that considered monitoring reports did not have the power to issue a disciplinary penalty, but could only pass the matter on to the Investigation Committee. As this was dealt with as an internal “complaint”, I suspect that any penalty arising from such a referral would have featured not in the IPA’s monitoring visit outcomes, but in its complaint outcomes.

So how do the RPBs compare as regards complaints sanctions?

Complaints sanctions fall by a quarter

Although the IPA issued relatively fewer sanctions last year, I suspect that the monitoring visit referrals will take some time to work their way through to the sanction stage, so this is unlikely to show that those referrals ended in a finding of “no case to answer”.

What this and the previous graph show quite dramatically, though, is that last year the ICAEW seemed to issue far fewer sanctions per IP than the IPA. As mentioned in my last blog, the IPA licenses a large majority of the VIP IPs, and there were more complaints last year about IVAs than about all the other case types put together. One third of the published sanctions were also issued against VIP IPs.

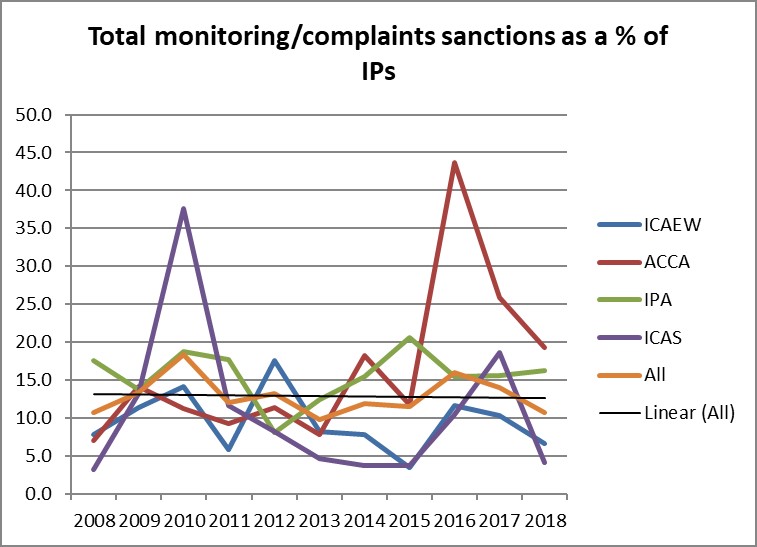

Likelihood of being sanctioned is unchanged from a decade ago

In 2018, you had a 1 in c.10 chance of receiving an RPB sanction, which was the same probability as in 2008…

I find it interesting to see the IPA’s and the ACCA’s results converging. Given that the IPA handles both RPBs’ regulatory processes, this is what I would expect, were it not for the suspected VIP effect on the IPA’s figures.

There’s not a lot that can be surmised from the number of sanctions issued by the other two RPBs: they’re a bit spiky, but it does seem that, on the whole, the ICAEW and ICAS have issued far fewer sanctions. It seems from this that, at least for last year, you were roughly half as likely to receive a sanction if you were ICAEW- or ICAS-licensed as you were if you were IPA- or ACCA-licensed.

Is a Single Regulator the answer to bringing consistency?

True, these graphs do seem to indicate that different RPBs implement different regulatory approaches. However, I do think that some of that variation is due to the different make-up of their regulated populations. There is no doubt that the IVA specialists do require a different approach. To a lesser degree, I think that a different approach is also merited when an RPB monitors practices with robust internal compliance teams; it is so much more difficult to have your work critiqued and challenged on a daily basis when you work in a 1-2 IP practice.

Differences in approach can also be a good thing. Seeing other RPBs do things differently can force an RPB to challenge what it is doing and to innovate. My main concern with the idea of a single regulator is the loss of this advantage of the multi-regulator structure.

Perhaps a Single Regulator could bring more consistency, but it would never result in perfectly consistent outcomes. I’m sure I’m not the only one who remembers an exercise a certain JIEB tutor ran: all of us students were given the same exam answer to mark against the same marking guide. The results varied wildly. This demonstrated to me that, as long as humans are involved in the process, different outcomes will always emerge.